Projects

Current

Save 58

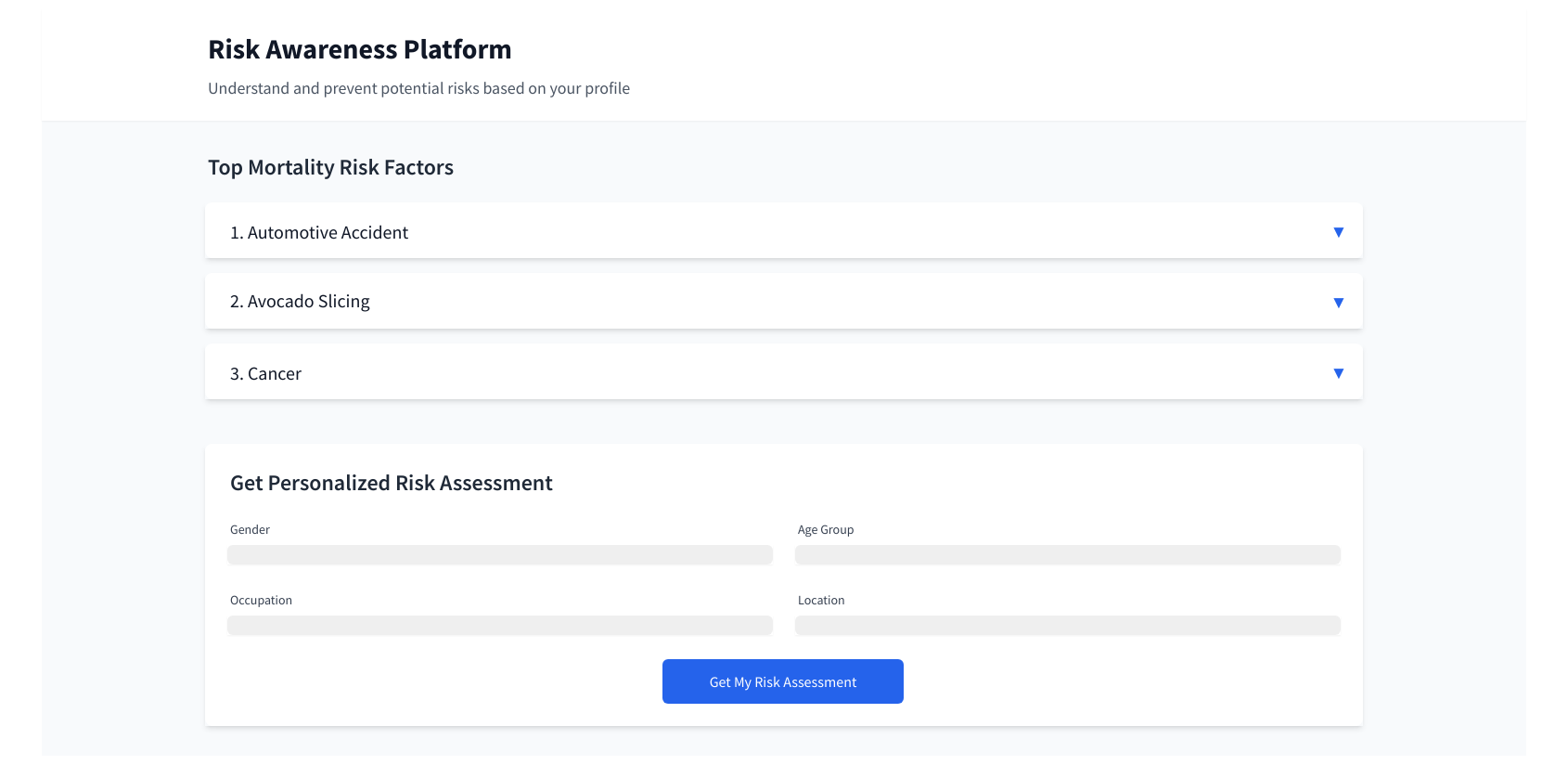

Inspiration: Kurzgesagt – In a Nutshell: This Video Might Save 58 Lives Next Week

Hypothesis: Can an automated message (email, tweet, Slack, video) save lives?

Premise: Can providing the statistically likely causes of death or injury for a specific person's demographic (age, gender, profession), combined with current conditions (date, geography, weather), make that person mindful enough of a particular danger to save their life?

Pros:

- This is a deceptively hard problem involving multidimensional big data. It could easily keep intern teams occupied for several years.

- Decomposable. It's possible to break this project into smaller, month-long deliverables that are still challenging.

- Motivational mission. It feels good to do some good.

- Potentially marketable as doing good

Cons:

- Delicate topics for the workplace. See the video.

- Not profitable without turning people into the product.

Business Value

- Data science tooling

- iceberg

- developing a model

- Data/ETL

- repeatable data scraping

- automated data evaluation

- New technologies

- sveltekit

- nix/flake

- tauri

- multidimensional database

- automated web scraping

- biome

Next steps

- Week 0

- Evaluate data

- Bootstrap project

- Repo

- monorepo

- think about directory structure for data, server, client

- Tooling: direnv / nix flake <- Daniel or Kyle?

- Typescript/Sveltekit

- tooling/one off scripts can be polyglot

- Linter/formatter - biome

- Postgresql docker?

- CI - github actions

- README

- Management

- Assign primary mentors

- pick days in office

- Laptops - MCD acting

- github/slack access

- Impact map/Stories

- Review notes

- Need more challenges

- Week 1

- The missing semester

- Review the first six chapters

- Command-line Environment and Version Control (Git) will be really helpful

- Ask lots of questions

- link 4cs

- Tool setup

- Check out the GitHub repo and work through the README

- Ask fellow mojos! #internship #engineering

- First commit?

- Discovery

- Week 2 - atomic commits / unraveling git commit

- auth

- Implement boilerplate design? Jesse has a headless UI library he likes

- Anonymous user landing page

- Authenticated user page

- privacy policy

- Scraping/Data collection

- Aggregate data

- Automate import into database

- unit test / some web output for

Week before daylight saving time, "start shifting your schedule by 15 minutes earlier/later. Heart attacks and car crashes increase after the daylight saving time change due to sleep deprivation"

- video parity

- 3 million people will watch this video

- 15-35

- living in western countries

- driving

- 8 in car crash next week. 416 of you over the next year. 2m29s

- 2: 30% from speeding

- 2: 25% from drinking and driving

- 1: from distracted

- 3: from not wearing a seat belt

- 26 by falling in the next year. 5m17s

- scaffolding, ladders, hiking

- 1 of you will drown next week. 6m07s

- overestimating swimming abilities

- going into the water drunk

- cruise ships

- 10 of you will die from self harm next week 7m12s

- crisis situations triggered by traumatic events and extraordinary situations

- resources for help

- 5 of you will die from cancer next week. 8m52s

- regular checkups and screenings

- sunscreen

- sharing

- Week 3

- Ticket work.

- Week 4

- Ticket work.
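The video-parity figures listed under Week 2 can be sanity-checked with a little arithmetic. The sketch below is only an illustration: it uses the numbers quoted in the notes (3 million viewers, 8 car-crash deaths per week, the 2/2/1/3 breakdown), while the function names and the idea of rescaling to a smaller audience are assumptions, not part of the video or of any agreed design.

```typescript
// Figures quoted in the notes above; everything else here is hypothetical.
const AUDIENCE = 3_000_000;
const WEEKS_PER_YEAR = 52;

// Convert a weekly count within the audience to a yearly count.
function weeklyToYearly(perWeek: number): number {
  return perWeek * WEEKS_PER_YEAR;
}

// Express a yearly count as a rate per 100,000 people.
function yearlyRatePer100k(perYear: number, audience: number): number {
  return (perYear / audience) * 100_000;
}

// Scale a yearly per-100k rate to an expected weekly count for any audience size.
function expectedPerWeek(ratePer100k: number, audience: number): number {
  return ((ratePer100k / 100_000) * audience) / WEEKS_PER_YEAR;
}

const carCrashPerYear = weeklyToYearly(8); // 416, matching the notes
const carCrashRate = yearlyRatePer100k(carCrashPerYear, AUDIENCE);

// Breakdown of the 8 weekly car-crash deaths as listed in the notes.
const carCrashBreakdown = [
  { cause: "speeding", deaths: 2 },
  { cause: "drinking and driving", deaths: 2 },
  { cause: "distracted", deaths: 1 },
  { cause: "no seat belt", deaths: 3 },
];
const breakdownTotal = carCrashBreakdown.reduce((sum, row) => sum + row.deaths, 0);

console.log(carCrashPerYear); // 416
console.log(carCrashRate.toFixed(2)); // yearly rate per 100k viewers
console.log(breakdownTotal); // 8
console.log(expectedPerWeek(carCrashRate, 10_000)); // same rate, smaller audience
```

Reproducing the video's own numbers this way gives an early check that imported data and rate calculations agree before any new causes or demographics are added.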

Shallow vs deep

Shallow is a first step to get something working. Deep is a thorough technical investigation.

Shallow

- JSON / CSV in git

- Manual algorithm

- Web app only

- Manual notification trigger via web

- Manual data scraping

Deep

- Apache Iceberg for storage sharing. Data lake. <- Eric Gibb

- Multidimensional data in PostgreSQL / vector storage

- Desktop/mobile version via Tauri

- Worker queue for precalculating results and sending notifications. Use a different queue/worker than previous mojo work (not redis/sidekiq/bullmq)

- Automated LLM scraping

Thoughts

We should have a very clear privacy policy

Landing on the page should engage the user with data driven by a form. This can lead the user toward creating an account in order to receive more specific information on a schedule.

Dogfooding. Plugging in "Software Engineer" + "Remote" + "No standing desk" = "make sure to walk around every hour"

Living in downtown Manhattan on a Sunday morning, "be careful slicing the bagel this morning. X% of ER visits today come from bagel slicing"

Week before daylight saving time, "start shifting your schedule by 15 minutes earlier/later. Heart attacks and car crashes increase after the daylight saving time change due to sleep deprivation"

Needs to be friendly and not anxiety inducing. Carson mentioned the Citizen app as the antithesis.
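The three example messages in this section map naturally onto the "manual algorithm" idea from the shallow track: a short list of hand-written rules evaluated against a profile and the current conditions. The sketch below is one possible shape, not a decided design. All type names, field names, and rule ids are hypothetical, and the "X%" statistic is left out because no figure has been sourced yet.

```typescript
// Hypothetical shapes for what a user tells us and what we know about "now".
interface Profile {
  profession: string;
  remote: boolean;
  standingDesk: boolean;
  location: string;
}

interface Conditions {
  weekday: string; // e.g. "Sunday"
  morning: boolean;
  daysUntilClockChange: number | null; // null when no change is coming up
}

interface Rule {
  id: string;
  applies: (profile: Profile, conditions: Conditions) => boolean;
  message: string;
}

// Hand-written rules taken from the examples in the notes.
const RULES: Rule[] = [
  {
    id: "desk-break",
    applies: (p) => p.profession === "Software Engineer" && p.remote && !p.standingDesk,
    message: "Make sure to walk around every hour.",
  },
  {
    id: "bagel",
    applies: (p, c) => p.location === "Downtown Manhattan" && c.weekday === "Sunday" && c.morning,
    message: "Be careful slicing the bagel this morning.",
  },
  {
    id: "clock-change",
    applies: (_p, c) =>
      c.daysUntilClockChange !== null && c.daysUntilClockChange >= 1 && c.daysUntilClockChange <= 7,
    message: "Start shifting your schedule by 15 minutes a day.",
  },
];

// Return every rule that matches, in the order the rules are declared.
function tipsFor(profile: Profile, conditions: Conditions): Rule[] {
  return RULES.filter((rule) => rule.applies(profile, conditions));
}

// Usage: the dogfooding example from the notes.
const me: Profile = {
  profession: "Software Engineer",
  remote: true,
  standingDesk: false,
  location: "Providence",
};
const today: Conditions = { weekday: "Tuesday", morning: true, daysUntilClockChange: null };
console.log(tipsFor(me, today).map((rule) => rule.message));
```

Keeping rules as plain data with a predicate makes each one easy to unit test and easy to reword, which matters given the goal of staying friendly rather than anxiety inducing.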

Wireframe

Investigation

- Rhode Island

- Death Records

- Injury Surveillance Data

- Hospital Discharge Data

- Note: the request form seems to require a processing fee of $100 per data file per year, so roughly $2,000 plus processing time

- Kurzgesagt Sources

- The cause-of-death data have been extracted from WHO’s Mortality Database using the Cause of Death Explorer, and extracting the data for our demographic for each death cause mentioned in the video, as well as the total deaths from all causes in our demographic to calculate proportions.

- Please keep in mind the following considerations on this data:

- Stadiums of similar capacity include the Allianz Arena in Munich or the Empower Field in Denver.

- Empower Field: “About us” (retrieved 2024)

- World Health Organization, European region: “SDR(15-29), All causes, per 100 000” (retrieved 2024)

- Our World in Data: “Annual death rate by age group, United States, 2021”

- Our World in Data: “Terrorism deaths rate, 2011 to 2021” (retrieved 2024)

- A Westman et al. Parachuting from fixed objects: descriptive study of 106 fatal events in BASE jumping 1981–2006. Br J Sports Med. 2008

- Mei-Dan, Omer et al. (2013): “Fatalities in Wingsuit BASE Jumping” Wilderness & Environmental Medicine, vol. 24, 4, 321-327.

- U.S. department of energy: “Average Annual Vehicle Miles Traveled per Vehicle by Major Vehicle Category” (retrieved 2024)

- United States Department of Transportation: “Motorcycle Safety”

- World Health Organization Mortality Database: “Causes of Death Explorer: Road traffic accidents”

- Hunter, Luke (2020): “The Cheetah’s Speed Limit” Wild View, Wildlife Conservation Society

- International Council on Clean Transportation (2022): “European Market vehicle statistics”

- U.S. Environmental Protection Agency Automotive Trends Report (2023): “Automotive Trends Report”

- National Park Service U.S.: “The statue of Liberty” (retrieved 2024)

- Petrof: “What is the size of the grand piano and the upright piano?” (retrieved 2024)

- Royal Society for the Prevention of Accidents (2018): “Road Safety Factsheet”

- Quote: “Inappropriate speed contributes to around 11% of all injury collisions reported to the police, 15% of crashes resulting in a serious injury and 24% of collisions that result in a death. This includes both ‘excessive speed’, when the speed limit is exceeded but also driving or riding within the speed limit when this is too fast for the conditions at the time (for example, in poor weather, poor visibility or high pedestrian activity).”

- World Health Organization: “Managing speed” (2017)

- Perez, Miguel A. et al (2021): “Factors modifying the likelihood of speeding behaviors based on naturalistic driving data”, Accident Analysis & Prevention, vol. 159, 106267

- World Health Organization (2023): “Road traffic injuries”

- National Highway Traffic Safety Administration (2020): “Traffic Safety Facts: Young Drivers”

- World Health Organization (2019): “SAFER: Advance and enforce drink driving counter measures”

- European Commission (2023): “New report from the European Road Safety Observatory: focus on drink driving”

- European Commission (2022): “Road Safety Thematic Report – Driver distraction”

- National Highway Traffic Safety Administration (2021): “Distracted Driving 2019”

- Australian Automobile Association: “Distracted driving” (retrieved 2024)

- IFAB: "The Field of Play" (retrieved 2024)

- National Highway Traffic Safety Administration (2020): “Traffic Safety Facts: Young Drivers”

- European Transport Safety Council (2006): “Seat Belt Reminders: Implementing advanced safety technology in Europe’s cars”

- U.S. Centers for Disease Control and Prevention (2024): “Leading Causes of Nonfatal Emergency Department Visits”

- U.S. Census bureau: “National Population by Characteristics: 2020-2023” (retrieved 2024)

- World Health Organization Mortality Database: “Causes of Death Explorer: Falls”

- World Health Organization Mortality Database: “Causes of Death Explorer: Drownings”

- Armstrong, Erika J.; Erskine, Kevin L (2018): “Investigation of Drowning Deaths: A Practical Review”

- U.S. Centers for Disease Control and Prevention (2024): “Drowning Facts”

- U.S. Centers for Disease Control and Prevention (2024): “WISQARS Leading Causes of Death Visualization Tool: Ages 5 to 14”

- Quote: “Also for the love of god, be careful on cruise ships – if you fall into the water you have a 60% chance of dying.”

- Gönel, Orhan; Çiçek, İsmail (2020): “Statistical analysis of Man Overboard (MOB) incidents”. Chapter in “Engineering and Architecture Sciences: Theory, Current Research and New Trends”, edited by Dr. Emine Yildiz Kuyrikçu. IVPE Publisher.

- World Health Organization Mortality Database: “Causes of Death Explorer: Self-inflicted injuries”

- Cooper, Jessica; Appleby, Louise; Amos, T. (2002): “Life events preceding suicide by young people” Social psychiatry and psychiatric epidemiology, vol. 37, 6, 271–275

- Liu, Richard T.; Miller, Ivan (2014): “Life events and suicidal ideation and behavior: A systematic review” Clinical Psychology Review, vol. 34, 3, 181-192

- Bader, S. et al. (2021): “Warning signs of suicide attempts and risk of suicidal recurrence” European Psychiatry, vol. 64

- Kim, Eun Ji et al. (2022): “Comparing warning signs of suicide between suicide decedents with depression and those non-diagnosed psychiatric disorders” Suicide Life Threat Behavior, vol. 52, 178-189

- Harvard T.H. Chan School of Public Health: “Attempters’ Longterm Survival”, 2002

- World Health Organization Mortality Database: “Causes of Death Explorer: Malignant Neoplasms”

- National Cancer Institute: “Adolescents and Young Adults with Cancer”

- Trama, Annalisa et al. (2023): “Cancer burden in adolescents and young adults in Europe”, European Society for Medical Oncology, vol. 8,1

- Everyone should know how their breasts normally look and feel and report any changes to a health care provider right away. It is recommended to perform a breast self-exam once a month:

- If you have testicles and have already undergone puberty, some doctors also recommend a monthly testicular self-exam to get to know what's normal for you and to be able to report any changes to your healthcare provider without delay:

- Testicular Cancer Awareness foundation: “Monthly testicular self-exam” (retrieved 2024) https://www.testicularcancerawarenessfoundation.org/self-exam-resources

- Though not customarily recommended due to the risk of false positives, it is possible to perform a thyroid self-examination. However, keep in mind that the thyroid gland may appear enlarged or bumps on your neck may appear for many reasons other than cancer. American Association of Clinical Endocrinology: “How to check your thyroid” (retrieved 2024) https://www.aace.com/disease-and-conditions/thyroid/how-check-your-thyroid

- United States

- United Kingdom

- https://www.cancerresearchuk.org/health-professional/cancer-screening

- Canada: https://cancer.ca/en/cancer-information/find-cancer-early/screening-for-cancer

- Australia: https://screeningresources.cancervic.org.au/sections/national-cancer-screening-programs

- American Cancer Society: “Can Melanoma Skin Cancer Be Prevented?” (retrieved 2024)

- U.S. Centers for Disease Control and Prevention (2023): “Reducing Risk for Skin Cancer”

- Melanoma Institute Australia: “How to Prevent Melanoma” (retrieved 2024)

- World Health Organization Mortality Database: “Causes of Death Explorer: Malignant Skin Melanoma”

Legacy

Helios

Helios is our weather/welcome display for the Providence and Boulder front doors. It serves as an opportunity to welcome guests and show off some of our technical expertise.

- Front-end

- react: https://reactjs.org/

- apollo client: https://www.apollographql.com/docs/react/

- ramdajs: https://ramdajs.com/

- matter.js: http://brm.io/matter-js/

- Back-end

- ruby on rails: https://rubyonrails.org/

- graphql-ruby: https://github.com/rmosolgo/graphql-ruby

- postgresql: https://www.postgresql.org/

- redis: https://redis.io/

- APIs

- graphql: https://graphql.org/

- docker: https://www.docker.com/

StandupHub

StandupHub is a web service to easily track your tasks and their statuses for standup.

Stack

- PT issues: https://www.pivotaltracker.com/n/projects/1553257

- Slack: #standuphub

- Front-end

- github: https://github.com/mojotech/standup-web

- react 16: https://reactjs.org/

- redux: https://redux.js.org/

- Back-end

- github: https://github.com/mojotech/standup-api-ex

- Phoenix: https://www.phoenixframework.org/

- Elixir: https://elixir-lang.org/

- Back-end (legacy)

- docker: https://www.docker.com/

Startup

cd standuphub-api-ex

# start a PostgreSQL instance on localhost:5432. You can use an OS service instead of docker

docker-compose up db

# start the Phoenix server on localhost:4000, connected to the database on localhost

mix phx.server

cd ../standuphub-web

# start webpack server on localhost:3000 pointed at the local Phoenix API server

REACT_APP_API_SERVER=http://localhost:4000 yarn start